The Reasoning Engine

Intelligence got cheaper

In software development, we’re accustomed to connecting many components to build complex systems, and those components have been stable for a while: you have a database as the storage engine for transactional data, a queue to transport data reliably and at scale between parts of the system, load balancers and CDNs, and the 200+ other AWS services at your disposal. What we never had before was a component for reasoning: an engine we can throw data at, which reasons for a bit and gives an answer back. Now we do.

Some may argue that what LLMs do is not really reasoning; even so, they have definitely pushed the boundaries of what is possible to automate. As we automated away mechanical and repetitive tasks, more and more of us became knowledge workers, doing non-repetitive or highly flexible work that cannot be captured in a formula and automated, or at least is not worth automating. Now, for the first time, we can also automate tasks that require knowledge and intelligence and are somewhat non-repetitive: the tasks we always said required reasoning.

For the first time, our software can make a function call that executes a task specified in natural human language, a task that requires some level of intelligence, and have the computer carry it out instead of a fellow co-worker.
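To make the idea concrete, here is a minimal Python sketch of such a call. The `reason` function, the `LLM` type alias and the prompt wording are hypothetical, chosen only for illustration; the model is injected as a plain function, so the sketch does not assume any particular provider’s API. A stub stands in for the model so it runs offline.

```python
from typing import Callable

# Hypothetical type: any function that sends a prompt to a model and returns its reply.
LLM = Callable[[str], str]


def reason(task: str, data: str, llm: LLM) -> str:
    """Run a task described in plain language over some input data.

    This helper only assembles the prompt; the "reasoning" is delegated to
    whichever model the caller plugs in.
    """
    prompt = (
        f"Task: {task}\n"
        f"Input:\n{data}\n"
        "Answer:"
    )
    return llm(prompt).strip()


# Stand-in model so the sketch runs without network access. A real model would
# follow the task; this stub just returns the input uppercased to show the data flow.
def fake_llm(prompt: str) -> str:
    data = prompt.split("Input:\n", 1)[1].rsplit("\nAnswer:", 1)[0]
    return f"  {data.upper()}  "


print(reason("Rewrite this in a playful tone", "a sturdy blue mug", fake_llm))
# A STURDY BLUE MUG
```

Keeping the model behind a plain callable means the rest of the software treats "reasoning" like any other dependency: it can be swapped, mocked in tests, or routed to a cheaper model later.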

Let’s take the example of a retail e-commerce business with a catalog of over 40 thousand items from various suppliers, which adds its own “playful and funny” branding on top. To carry this branding throughout the whole store, the business needs to rewrite every supplier-provided item description. To do that, it pays a hefty sum to an agency with an army of humans rewriting those descriptions in the brand’s tone of voice. This costs the business millions per year, but there is no other way: rewriting requires a level of intelligence. It turns out, though, that rewriting in a different tone of voice can now be automated with LLMs, bringing the cost down from a few hundred dollars per item to cents. Even if there is a trade-off in quality, it would have to be huge to offset the difference in price, and as LLMs get smarter, the gap is definitely shrinking. You can now execute tasks that require intelligence for a cheaper price. Intelligence got cheaper.

Nor do humans need to be displaced for the reasoning engine to add value. Automation is probably the most clichéd example, but there is much more to be gained from the accretion of new ideas: tiny gaps that require intelligence but would never be filled unless doing so were really cheap.

For example, consider an online travel agency selling tickets to attractions and things to do around the world that wants to help travelers have fun even when they are unlucky with the weather and get heavy rain on vacation. The agency doesn’t know which activities are fun in bad weather. It could ask the suppliers, but suppliers may be quite biased about themselves. It could crowdsource the information from its community, but it could take years to gather enough data worldwide to make recommendations. It could ask its own travel experts and employees, or hire an agency to mark each attraction as “good for heavy rain” or not, but with a hundred thousand products the task becomes incredibly boring and cost-prohibitive for such a minor feature. There is no return on investment, so this feature would never happen. Guess what: LLMs have world knowledge comparable to a travel expert’s, they can be good at estimating whether an attraction suits rainy days, and they can carry out the task for a modest sum. Suddenly, the feature becomes ROI-positive and pops into existence.
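A minimal sketch of how the rainy-day flag could be produced in bulk, assuming the model is reachable through some `ask(prompt) -> str` function (a hypothetical stand-in for a real LLM client). The helper names, prompt and catalog fields are illustrative. The prompt constrains the answer to YES or NO, and anything else is left unlabeled rather than guessed, so a noisy reply does not silently become a wrong label.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical interface: send a prompt to some LLM and get its text reply back.
Ask = Callable[[str], str]


def good_for_heavy_rain(name: str, description: str, ask: Ask) -> Optional[bool]:
    """Ask the model whether an attraction is still fun during heavy rain.

    Returns True for a YES, False for a NO, and None for anything else,
    so ambiguous replies stay unlabeled instead of becoming wrong labels.
    """
    prompt = (
        "You are a travel expert.\n"
        f"Attraction: {name}\n"
        f"Description: {description}\n"
        "Is this a good thing to do during heavy rain? Answer only YES or NO."
    )
    words = ask(prompt).strip().upper().split()
    first = words[0].strip(".,!") if words else ""
    if first == "YES":
        return True
    if first == "NO":
        return False
    return None


def label_catalog(products: List[Dict[str, str]], ask: Ask) -> Dict[str, Optional[bool]]:
    # One model call per product; a real pipeline would batch or parallelize these.
    return {p["id"]: good_for_heavy_rain(p["name"], p["description"], ask) for p in products}


# Stand-in model so the sketch runs offline: it says YES to anything described as indoor.
def stub_ask(prompt: str) -> str:
    return "YES" if "indoor" in prompt.lower() else "no."


catalog = [
    {"id": "a1", "name": "City Aquarium", "description": "Indoor aquarium with 300 species"},
    {"id": "a2", "name": "Sunset Kayak Tour", "description": "Paddle along the coast at dusk"},
]
print(label_catalog(catalog, stub_ask))  # {'a1': True, 'a2': False}
```

The `None` case matters at a hundred thousand products: a small share of unclear answers can be retried or sent to a human, which is still far cheaper than reviewing everything by hand.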

Where does traditional machine learning fit into this? This looks like a task a classification model could easily carry out, and besides, isn’t ML already an engine capable of more than the logical processing of traditional software? Well, yes and no. Sure, it can carry out a task that requires some intelligence, but it doesn’t reason about the task itself; it mindlessly executes the one thing it was trained for. For this specific example, unless there is an off-the-shelf attraction-to-rain classification model out there, there would again be a high cost: hiring data scientists to gather labeled data and then develop, train and run a model specific to your business, on timelines measured in months. That keeps it cost-prohibitive.

These examples only scratch the surface; the abstraction of a Reasoning Engine available anywhere can take software much further. Think of a system that integrates with any other system on the fly by generating API integrations as users need them; an exception handler that auto-recovers by understanding the cause of an issue and patching the data so it can pass through; UIs generated on the fly for user amusement; or a supervision-tree agent that quarantines and reviews all actions executed by the thousands of mini automated agents across the system, reporting to the human in charge. The possibilities are far larger than my creativity can contain.

Sharp Decline in Cost

The two biggest blockers to Reasoning Engines becoming more and more ingrained in our software are quality and cost. Quality is, of course, where the biggest challenge and the most popular race lie. However, even with lower-quality models, we can play to computers’ advantages: they scale easily and never get tired. Take software development: even GPT-4 is often no better than a junior developer, but there is no reason we can’t employ an army of 1,000 almost-junior LLM developers working 24/7 until they crack a problem. The main limiting factor right now is cost, and cost has been in sharp decline.
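One way to picture that army, sketched under stated assumptions: sample many independent attempts and keep the first one that passes an automated check, such as the project’s test suite. The `generate` and `verify` callables below are hypothetical placeholders (a real `generate` would prompt an LLM, and a real `verify` would run tests in a sandbox); the stubs only demonstrate the control flow of this generate-and-verify loop.

```python
import random
from typing import Callable, Optional

# Hypothetical interfaces: a real `generate` would prompt an LLM (varying the seed or
# temperature per attempt), and a real `verify` would run the test suite in a sandbox.
Generate = Callable[[str, int], str]
Verify = Callable[[str], bool]


def solve_by_many_attempts(problem: str, generate: Generate, verify: Verify,
                           max_attempts: int = 1000) -> Optional[str]:
    """Sample candidate solutions until one passes verification or the budget runs out.

    Attempts are independent, so they could run in parallel; they run
    sequentially here to keep the control flow obvious.
    """
    for attempt in range(max_attempts):
        candidate = generate(problem, attempt)
        if verify(candidate):
            return candidate
    return None


# Stand-in "almost-junior developer": guesses the body of add(a, b) at random.
def guessing_dev(problem: str, seed: int) -> str:
    return random.Random(seed).choice(["a - b", "a * b", "a + b", "b - a"])


def passes_tests(body: str) -> bool:
    # eval is acceptable only because candidates come from the fixed stub list above.
    add = eval(f"lambda a, b: {body}")
    return add(2, 3) == 5 and add(-1, 1) == 0


print(solve_by_many_attempts("implement add(a, b)", guessing_dev, passes_tests))  # a + b
```

The trade is quality for volume: a weak generator still succeeds if it is right occasionally and the checker is reliable, which is why the price per attempt is what decides whether this approach pays off.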

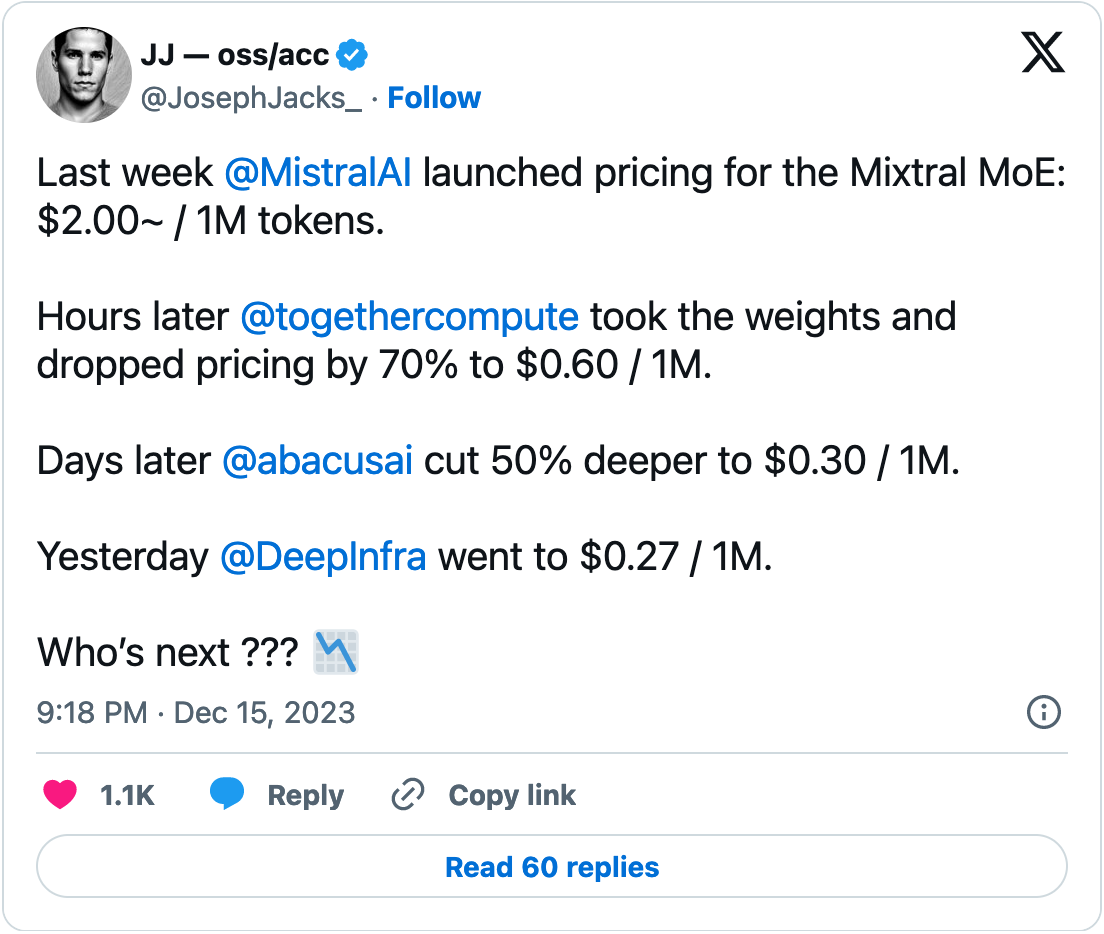

This year, OpenAI announced GPT-4 Turbo with a 3x reduction in GPT-4’s input-token price (2x for output tokens) even as it massively increased the context window; a race has started to outperform NVIDIA GPUs with chips specialized for transformers; and open source is catching up on performance, with tiny models that run on your phone and architectural innovations such as mixture-of-experts (MoE), which cuts inference cost to ~25% of a dense model with the same total number of parameters, as in Mistral’s Mixtral 8x7B. The race to the bottom is so intense that Google decided to offer Gemini Pro for free (within rate limits).
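The ~25% figure comes from sparse routing: in Mixtral 8x7B, a router picks 2 of the 8 experts for each token, so only those two expert networks run. Below is a toy top-2 routing step in plain Python; the experts are stand-in functions and all names are illustrative, not Mistral’s code. Attention and other shared layers still run for every token, which is why Mixtral’s real active-parameter count (about 13B of roughly 47B) sits a bit above 25%.

```python
import math
from typing import Callable, List, Sequence

# An "expert" is any function from a token vector to a vector of the same size.
Expert = Callable[[List[float]], List[float]]


def top_k_moe(x: List[float], gate_logits: Sequence[float],
              experts: Sequence[Expert], k: int = 2) -> List[float]:
    """Route one token through only the k experts with the highest gate scores.

    The output is the softmax-weighted sum of the chosen experts' outputs
    (softmax taken over the selected logits only); every other expert is skipped.
    """
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    weights = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(weights)
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are ever evaluated
        out = [o + (w / total) * yi for o, yi in zip(out, y)]
    return out


calls: List[int] = []  # records which experts actually ran


def make_expert(i: int) -> Expert:
    def expert(x: List[float]) -> List[float]:
        calls.append(i)
        return [(i + 1) * v for v in x]  # toy expert: scale the input by i + 1
    return expert


experts = [make_expert(i) for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 0.2]
print(top_k_moe([1.0, 1.0], logits, experts))  # [3.5, 3.5]
print(sorted(calls), len(calls) / len(experts))  # [1, 4] 0.25
```

All eight experts hold parameters, but each token pays for only two of them, so the model keeps the capacity of its total parameter count at a fraction of the per-token compute.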

GPT-3.5, which launched the LLM race at the beginning of 2023, cost $2.00 / 1M tokens (advertised as $0.002 / 1K tokens). Mixtral 8x7B, which at the end of 2023 is comparable or even better in performance, now costs $0.27 / 1M tokens at DeepInfra. That is an 86.5% reduction in cost in a single year, an impressive feat. For contrast, IT equipment prices historically fell “just” 23% per year in their best years.
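The arithmetic behind the 86.5% figure, plus how many years a 23%-per-year decline would need to reach the same point (taking the 23% figure at face value):

$$
1 - \frac{0.27}{2.00} = 1 - 0.135 = 0.865 \quad (86.5\%\ \text{reduction})
$$

$$
(1 - 0.23)^{n} = 0.135 \;\Rightarrow\; n = \frac{\ln 0.135}{\ln 0.77} \approx 7.7\ \text{years}
$$

In other words, under those assumptions, one year of LLM price decline matched roughly seven to eight years of IT equipment price decline at its historical best.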

LLM as the Kernel

Taking a step further, some people, most notably Andrej Karpathy, have proposed the concept of an LLM OS: the LLM sits at the very core purely for its reasoning capabilities, similar to a CPU or our brain, with other components connected to extend its limits, such as longer-term memory, peripherals like audio and video, and communication with the network and with other LLMs.

At its core, this idea assumes the LLM is capable of enough general reasoning to orchestrate all those elements, which is only true at a shallow level as of now. But as context windows and multimodal capabilities expand, models are getting better and better at it, already controlling computers and mobile phones while doing all the reasoning needed to carry out a task.
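A minimal sketch of the orchestration loop implied by the LLM OS picture: the model decides, and a runtime executes the chosen peripheral and feeds the result back. The `CALL`/`DONE` text protocol, the tool names and the scripted model are hypothetical placeholders; real systems often rely on structured tool-calling interfaces instead, but the control flow is the same.

```python
from typing import Callable, Dict, List

# Hypothetical interfaces: the "kernel" model maps the history so far to its next
# instruction, and each tool (a "peripheral") maps a text argument to a text result.
Model = Callable[[List[str]], str]
Tool = Callable[[str], str]


def run_kernel(goal: str, model: Model, tools: Dict[str, Tool], max_steps: int = 10) -> str:
    """Let the model orchestrate tools until it declares the goal done.

    Assumed protocol: the model replies "CALL <tool> <argument>" to use a tool
    or "DONE <answer>" to finish; every tool result is appended to the history.
    """
    history = [f"GOAL {goal}"]
    for _ in range(max_steps):
        action = model(history).strip()
        history.append(action)
        if action.startswith("DONE "):
            return action[len("DONE "):]
        if action.startswith("CALL "):
            _, name, arg = (action.split(" ", 2) + [""])[:3]
            tool = tools.get(name)
            history.append(f"RESULT {tool(arg)}" if tool else f"RESULT unknown tool {name}")
        else:
            history.append("RESULT invalid action")
    return "gave up"  # step budget exhausted


# A long-term-memory "peripheral" backed by a dict.
memory = {"user_name": "Ada"}
tools: Dict[str, Tool] = {"memory": lambda key: memory.get(key, "not found")}


def scripted_model(history: List[str]) -> str:
    # Stand-in for an LLM: look up the user's name, then answer with it.
    last = history[-1]
    if last.startswith("GOAL"):
        return "CALL memory user_name"
    if last.startswith("RESULT "):
        return f"DONE Hello, {last[len('RESULT '):]}!"
    return "DONE unsure"


print(run_kernel("greet the user", scripted_model, tools))  # Hello, Ada!
```

The step budget is the runtime’s safety valve: when the model’s reasoning is only shallow, it can loop or call tools that do not exist, and the kernel loop has to stop it rather than trust it.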

Kernel or not, at the core or at the edge of software, I cannot even begin to imagine where an engine capable of reasoning can lead in the creative hands of developers, and I’m starting this blog precisely to follow and write about it as it develops.

This Blog and Newsletter

Hello, my name is Rogerio 👋

I’ve been a software engineer for 14 years now, and I started this blog to share musings on the Reasoning Engine and follow its developments through the lens of a developer as it eats software. It doesn’t really matter whether it will indeed be advanced by LLMs or replaced by some better idea; the fact is, we won’t go back from here. The journey ahead is exciting for builders, and I will be following it closely.

My goal is to understand how devs are using LLMs, how both the foundational technology and the libraries and techniques built on top of it advance, for dev productivity but especially when embedded in the software itself, and what possibilities will be unlocked that we could never have guessed before.

Subscribe to the newsletter if you want to enjoy the ride too, or follow me on Twitter if you prefer AI memes instead.